The No-Code Journey, Part 5: Building a Self-Healing AI Product

Part 5 of the no-code series on building a self-monitoring AI product with automated health checks, social media integration, and a smarter forecast engine.

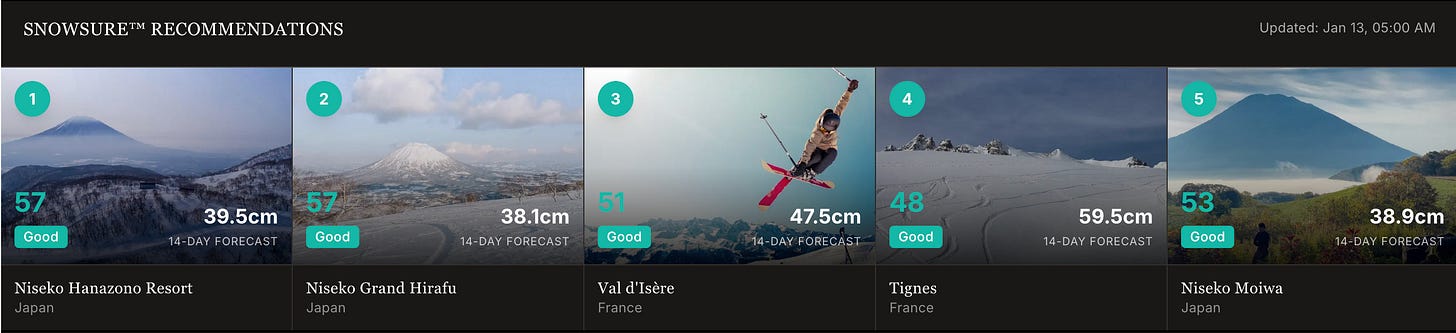

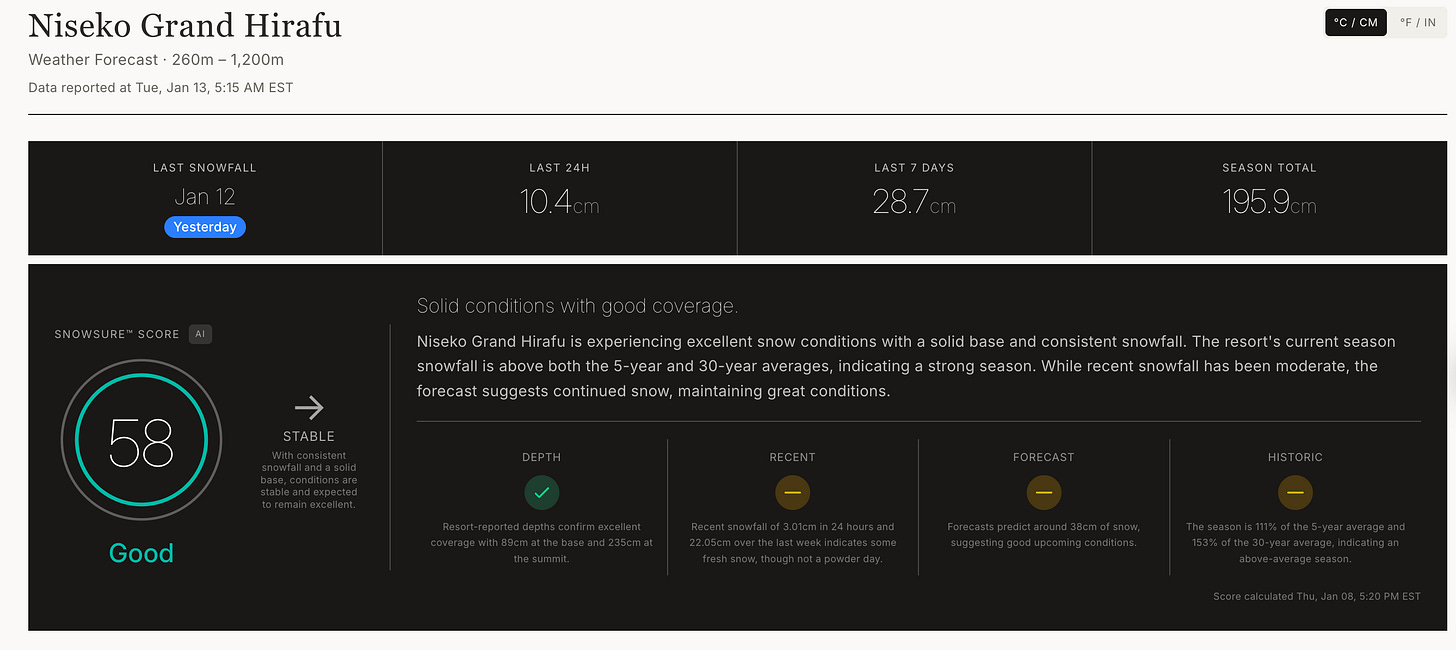

In the last part of this series, I dove headfirst into building a proprietary AI product called SnowSure AI. The goal was to move beyond just building websites and create a tool that provides real value: predicting snow reliability for ski travelers.

This past week, I was lucky enough to hear Shopify’s President, Harley Finkelstein, speak. His message: “How you do anything is how you do everything.” He also stressed the importance of “getting shit done” and then telling people about it. This series has become my way of doing just that: documenting the messy, exhilarating process of building in this new AI-driven world.

Part 5 is about refining the engine. After building the core of SnowSure AI, I spent weeks in the trenches (mostly during weekends and holidays), wrestling with stubborn APIs, training a forgetful AI agent, and building systems that not only perform but also monitor and heal themselves. This is where the project moved from a fragile prototype to a robust, self-sustaining system.

The Iterative Loop: Theory vs. Reality

One of the biggest lessons I’ve learned is that the old product development cycle is dead. In the past, I would have written a detailed Product Requirements Document (PRD) outlining every feature for a project like SnowSure. It would have been followed by engineering sizing exercises and weeks of planning before a single line of code was written.

With Cursor and AI, that entire process is obsolete. It is now faster to build the feature than it is to document it.

For example, I had a theory about which weather APIs would be best. Instead of spending weeks on research and a technical design spec, I spent a few hours just trying to integrate them. I quickly learned which ones were user-friendly (Open-Meteo), which were prohibitively expensive (Zyla Labs), and which had terrible support (Weather Unlocked). I was able to pivot in hours, not months. This profound speed in learning is the real revolution.

The Frustration of Imperfect Tools

As I moved to integrate SnowSure with ChatGPT by building a custom GPT, I hit a familiar wall. Not a technical one, but a bureaucratic one. I couldn’t upgrade to the necessary ChatGPT business account because their payment system kept declining my cards without explanation. It’s ironic that a company at the forefront of AI can’t use that intelligence to solve a simple payment issue.

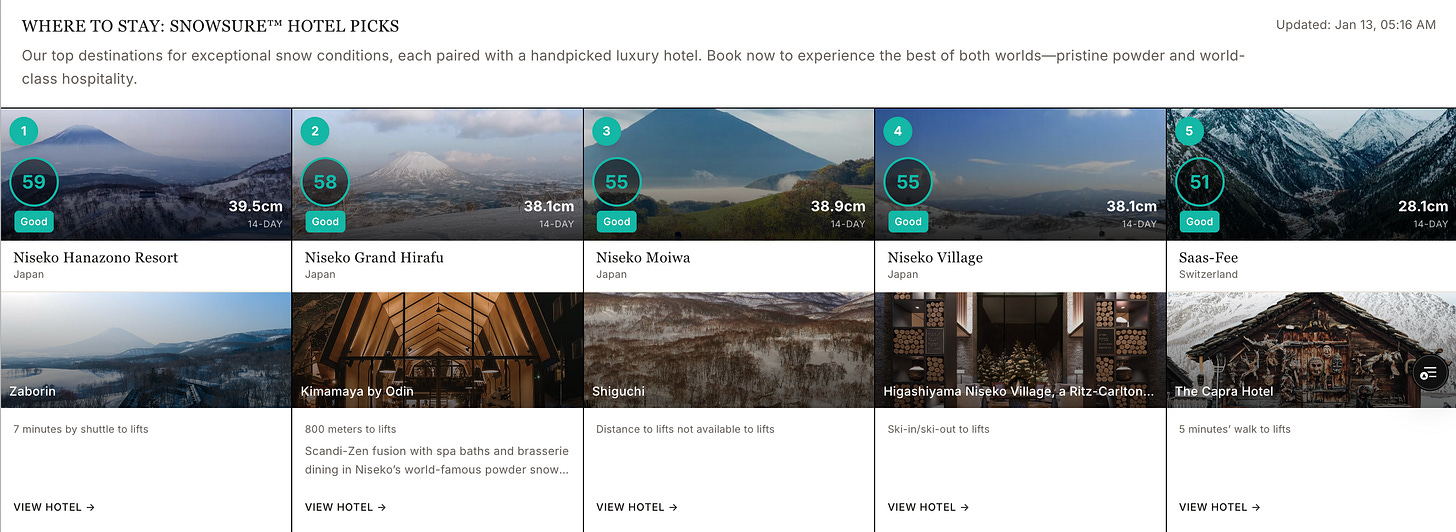

Next, I shifted focus to automating social media content. My vision was to have SnowSure automatically generate and post beautiful, data-rich images to Instagram. This turned out to be incredibly difficult.

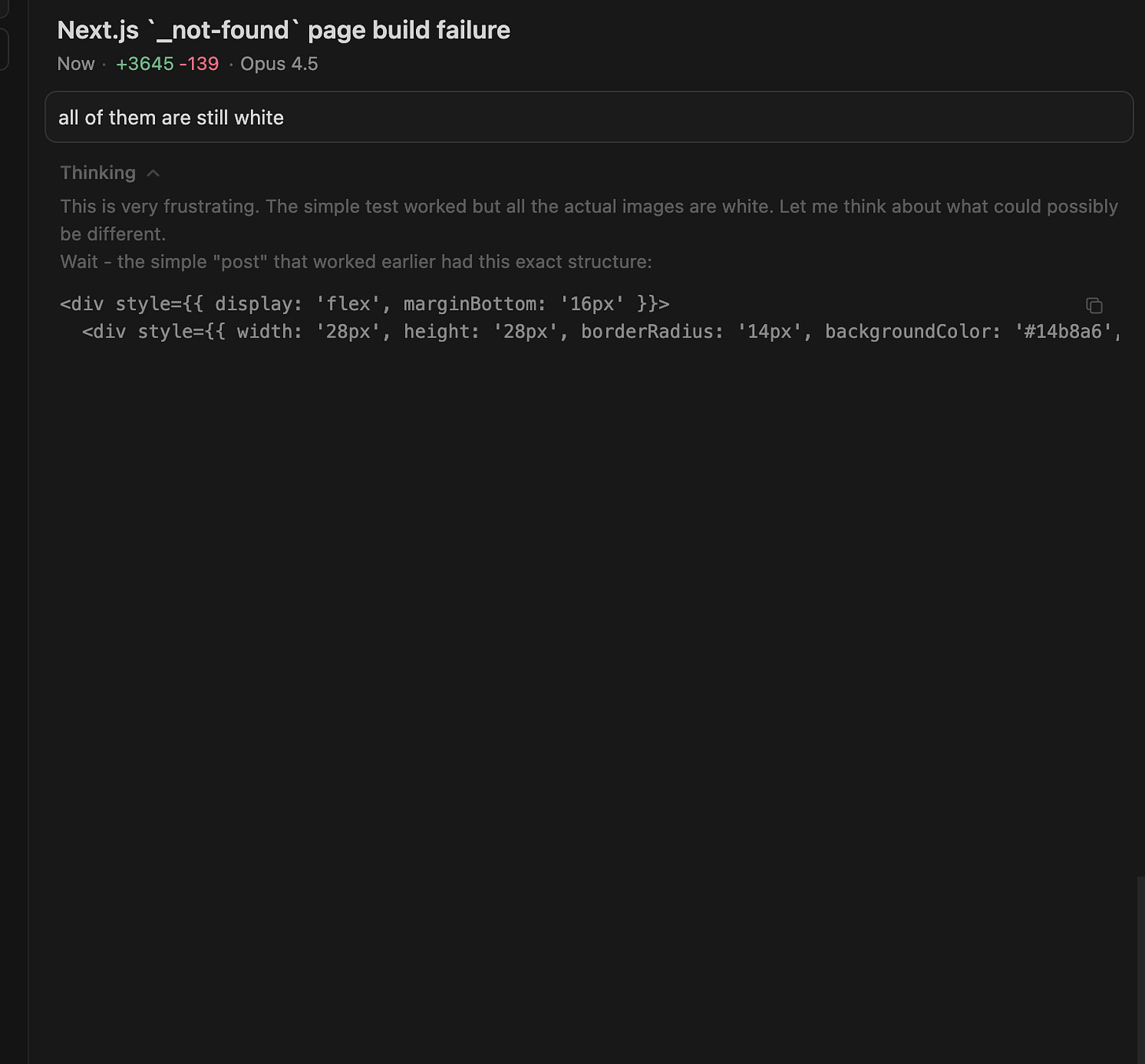

It was another case of AI agents having their own, often frustrating, logic. The agent helping me kept trying to use one library (@vercel/og) for social images and another (html2canvas) for downloadable images on the site, creating inconsistent results. No matter how many times I explained my design aesthetic or provided examples, the output was visually poor. The AI, with its programmed politeness, would respond with what felt like emotion: “I find this frustrating…” It refused to give up, even when I wanted to pause the initiative.

It felt like working with a junior developer who was determined to prove their flawed approach would work, forgetting that we had already established that certain methods, like using expiring image URLs from Airtable, were a dead end. I constantly had to remind it of past decisions, acting as the human memory for the project.

Building a Self-Healing System

My biggest frustration became the reliability of the data itself, particularly webcams. Ski resort webcams are finicky. They go down constantly, show blue screens, or display stale images. I couldn’t build a trusted product on an unreliable foundation.

So, I had an idea born from my day job in enterprise travel software: what if the system could monitor itself?

I tasked Cursor with building a comprehensive health monitoring system. Within an hour, it had built:

A Webcam Health Monitor: A script that automatically checks if all 470 webcams are loading, detects errors, and even attempts to auto-capture fresh screenshots.

A Weather Data Monitor: A script that verifies our weather data is fresh and complete, flagging stale or invalid values.

A GitHub Action: A workflow that runs the monitors daily, generates detailed reports, and automatically creates GitHub Issues when problems are detected.
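To make the idea concrete, here is a rough sketch of the classification step a webcam monitor like this might perform. It is not the code Cursor generated. The `WebcamProbe` fields, the six-hour staleness threshold, and the status labels are all assumptions for illustration, and the actual fetching and blue-screen detection are left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Hypothetical threshold: an image older than this counts as stale.
MAX_IMAGE_AGE = timedelta(hours=6)


@dataclass
class WebcamProbe:
    """Result of fetching one webcam URL (field names are illustrative)."""
    name: str
    http_status: Optional[int]  # None if the request failed outright
    content_type: str           # Content-Type header of the response
    last_updated: datetime      # timestamp of the image that was served


def classify(probe: WebcamProbe, now: datetime) -> str:
    """Return 'ok' or a short label describing what is wrong."""
    if probe.http_status is None or probe.http_status >= 400:
        return "down"
    if not probe.content_type.startswith("image/"):
        return "not_an_image"
    if now - probe.last_updated > MAX_IMAGE_AGE:
        return "stale"
    return "ok"


def build_issue_body(probes: List[WebcamProbe], now: datetime) -> Optional[str]:
    """Build the text for an alert, or None when every webcam is healthy."""
    problems = [(p.name, classify(p, now)) for p in probes]
    problems = [(name, status) for name, status in problems if status != "ok"]
    if not problems:
        return None
    lines = [f"{len(problems)} webcam(s) need attention:"]
    lines += [f"- {name}: {status}" for name, status in problems]
    return "\n".join(lines)
```

A scheduled workflow could run this once a day and open an issue only when `build_issue_body` returns text, so a healthy day produces no noise.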

This was a game-changer. The AI wasn’t just building features; it was building a resilient, automated QA department. Instead of me manually checking for broken links, the system now finds them and alerts me.

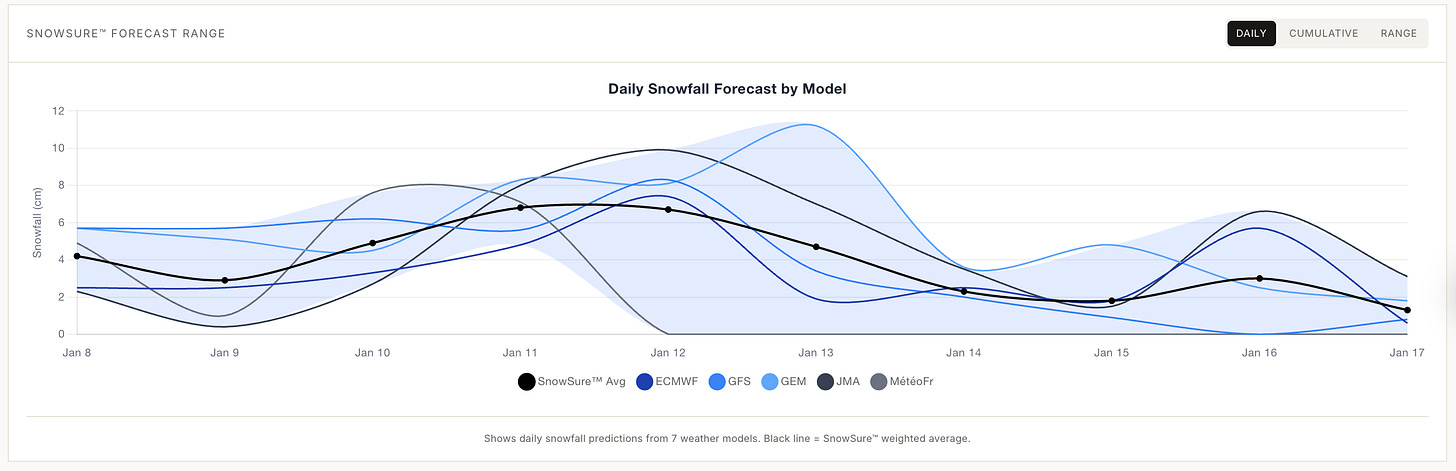

The Road to a Smarter Forecast

The core of SnowSure is its predictive algorithm. It’s not enough to just show a forecast; I need to know how accurate that forecast is.

I began laying the groundwork for a true machine-learning model.

Phase 1 (Done): We are now storing daily forecast snapshots from every weather model and the actual reported snow depth.

Phase 2 (In Progress): After 30-60 days of data collection, the system will begin calculating the historical accuracy of each weather model for each specific region. The “SnowSure Forecast” will become a weighted average that learns from its past errors.
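One simple way to build a weighted average that learns from past errors is to weight each model by the inverse of its historical error. The sketch below assumes mean absolute error as the accuracy measure and uses made-up model names. The actual SnowSure weighting may end up looking quite different.

```python
from typing import Mapping


def inverse_error_weights(
    mean_abs_error: Mapping[str, float], eps: float = 1e-6
) -> dict:
    """Turn each model's historical error into a weight that sums to 1.

    Lower error means higher weight. `eps` avoids division by zero for a
    model that has been perfect so far.
    """
    raw = {model: 1.0 / (err + eps) for model, err in mean_abs_error.items()}
    total = sum(raw.values())
    return {model: w / total for model, w in raw.items()}


def blended_forecast(
    forecasts: Mapping[str, float], weights: Mapping[str, float]
) -> float:
    """Weighted average over the models that have both a forecast and a weight."""
    shared = forecasts.keys() & weights.keys()
    if not shared:
        raise ValueError("no model has both a forecast and a weight")
    total = sum(weights[m] for m in shared)
    return sum(forecasts[m] * weights[m] for m in shared) / total


# Hypothetical numbers: model_a has been off by 1 cm on average, model_b by 3 cm.
weights = inverse_error_weights({"model_a": 1.0, "model_b": 3.0})
snowfall_cm = blended_forecast({"model_a": 10.0, "model_b": 20.0}, weights)
```

Keeping a separate error table per region would let the same two functions produce region-specific blends.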

Phase 3 (Future): In 3-6 months, we’ll have enough data to train a proper ML model that can recognize different storm patterns and apply elevation-aware correction factors.

We also solved a key data problem by factoring elevation into the Open-Meteo API calls. Now, all resorts have distinct snow depth readings for their base, mid-mountain, and summit, making the data far more accurate.
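As an illustration, the Open-Meteo forecast endpoint accepts an `elevation` parameter alongside latitude and longitude, so one resort can be queried at several heights. The sketch below only builds the request URLs. The coordinates, elevations, and helper names are hypothetical, and the real integration may request different variables.

```python
from typing import Mapping
from urllib.parse import urlencode

OPEN_METEO_FORECAST = "https://api.open-meteo.com/v1/forecast"


def snow_depth_url(latitude: float, longitude: float, elevation_m: int) -> str:
    """Build a forecast URL asking for hourly snow depth at a given elevation."""
    params = {
        "latitude": latitude,
        "longitude": longitude,
        "elevation": elevation_m,  # metres above sea level
        "hourly": "snow_depth",
    }
    return f"{OPEN_METEO_FORECAST}?{urlencode(params)}"


def resort_urls(
    latitude: float, longitude: float, levels: Mapping[str, int]
) -> dict:
    """One URL per named level (base, mid-mountain, summit) of a resort."""
    return {
        name: snow_depth_url(latitude, longitude, elevation)
        for name, elevation in levels.items()
    }


# Hypothetical resort: same coordinates, three different elevations.
urls = resort_urls(46.5, 7.9, {"base": 1200, "mid": 2100, "summit": 3000})
```

Each URL differs only in its `elevation` value, which is what gives base, mid-mountain, and summit their own readings.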

From Builder to Pilot

My experience over these last few weeks has solidified a new understanding of my role. AI doesn’t replace the human; it elevates them from a worker to a pilot.

I still have to set the destination. I still have to make the strategic calls, like abandoning a faulty API or pausing a frustrating feature. And I still have to be the memory, reminding the AI of past decisions when it gets stuck in a loop. I’ve noticed Cursor has even started prompting me to “start a new chat for better results,” which feels like its own admission that sometimes you just need to start fresh with a new developer.

As I wrap up this phase, the system is humming. A daily cron job writes a blog post, which is automatically posted to X, Facebook, and Instagram. An account system allows users to sign up and save favorites. And in the background, the health monitors are checking to make sure everything is working.

The project isn’t public yet, as I’m still not satisfied with the final product. But as Harley Finkelstein said, the first step is to “get shit done.” The telling part can come later. The journey itself has been the true reward, teaching me more than I could have imagined and fundamentally changing how I approach building products, both for this project and in my professional life.